
Megalo Web Services - Screaming Frog SEO Spider v19.2


Latest Updated Files

-

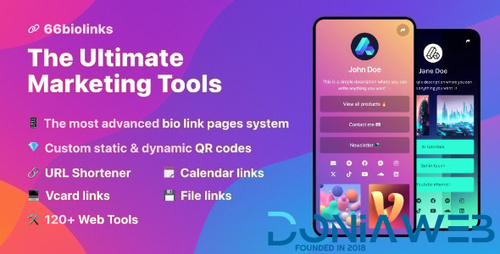

66biolinks - Bio Links, URL Shortener, QR Codes & Web Tools (SAAS) [Extended License]

- 56 Purchases

- 70 Comments

-

Free Fire AI Tournament App Design

- 5 Downloads

- 0 Comments

-

WordPress Automatic Plugin 1904470 By ValvePress

- 4 Downloads

- 0 Comments

-

Enfold - Responsive Multi-Purpose Theme

- 6 Downloads

- 0 Comments

-

ListingPro - Best WordPress Directory Theme

- 21 Downloads

- 0 Comments

-

Gravity Forms reCaptcha Add-On

- 6 Downloads

- 0 Comments

-

Bit Social PRO - Advanced Social Media Schedule & Auto Poster Plugin

- 30 Downloads

- 0 Comments

-

The Events Calendar Pro Event Tickets Plus Addon

- 5 Downloads

- 0 Comments

-

Brave - Drag n Drop WordPress Popup, Optin, Lead Gen & Survey Builders

- 10 Downloads

- 0 Comments

-

Bricks Ultimate - Ultimate Tools for Bricks Builder

- 8 Downloads

- 0 Comments

-

GravityView - Display Gravity Forms Entries on Your Websites

- 7 Downloads

- 0 Comments

-

Woocurrency by Woobewoo PRO

- 4 Downloads

- 0 Comments

-

Woo Product Filter PRO By WooBeWoo

- 11 Downloads

- 0 Comments

-

Gravity Forms Tooltips Add-On

- 1 Downloads

- 0 Comments

-

Gravity Forms Image Choices Add-On By JetSloth

- 7 Downloads

- 0 Comments

-

Gravity Forms Color Picker Add-On

- 1 Downloads

- 0 Comments

-

Gravity Forms Bulk Actions Add-On

- 3 Downloads

- 0 Comments

-

SaleBot - WhatsApp And Telegram Marketing SaaS + Addon + Flutter App for Android and iOS [Extended License]

- 0 Purchases

- 0 Comments

-

Masterstudy - Education WordPress Theme

.thumb.png.3f1bfda123fc58625543809c573aeff2.png)

- 62 Downloads

- 0 Comments

-

Woffice - Intranet, Extranet and Project Management WordPress Theme

- 42 Downloads

- 0 Comments
